Artificial Intelligence

Introduction

Artificial Intelligence (AI) has rapidly evolved from a futuristic concept to an integral part of our daily lives. From virtual assistants and recommendation systems to medical diagnostics and autonomous vehicles, AI is reshaping industries and societies. However, while the potential benefits are vast, AI also brings complex challenges and ethical dilemmas that must be addressed. AI is neither inherently good nor bad — it’s a powerful tool shaped by how we design, regulate, and use it. The challenge for society is to harness AI’s transformative potential while minimizing its risks.

The Pros and Cons of Artificial Intelligence

1. Efficiency and Automation

AI can perform repetitive and data-intensive tasks faster and often more accurately than humans, which boosts productivity in manufacturing, logistics, and even creative industries. It can also take on dangerous tasks such as mining, deep-sea exploration, and bomb disposal, minimizing human exposure to hazards. In the past, automation threatened traditional roles in manufacturing, customer service, and transportation; AI can now also perform tasks such as research, drafting, and analysis. Is there a danger that AI will displace jobs? Of course there is, but AI can also augment work, freeing people from routine duties so they can focus on creative, strategic, and interpersonal tasks.

2. Improved Decision-Making

AI systems can analyze vast datasets to uncover insights that humans might miss. In fields like healthcare, AI helps diagnose diseases earlier and more accurately. However, AI models can inherit biases present in their training data, resulting in discriminatory or unfair outcomes, especially in hiring, policing, or credit scoring. The key is to ensure diverse training data and regular human oversight.

3. Innovation and Creativity

Generative AI tools now assist in designing products, writing code, composing music, and even creating art. Overreliance on AI, however, may reduce critical thinking and problem-solving skills among humans. AI can also be used maliciously, for example to create deepfakes, automate cyberattacks, and spread misinformation, and even well-intentioned systems can produce hallucinations. These risks can be mitigated by human oversight of critical decisions.

4. Enhanced Customer Experience

Chatbots, personalization algorithms, and predictive analytics make services more responsive and tailored to individual needs. However, AI systems often collect and analyse personal data, raising questions about surveillance, consent, and data ownership. These concerns can be managed through effective controls and regulation.

Conclusion

The future of AI isn’t about avoiding its risks, but about transforming them into opportunities for a fairer, smarter, and more sustainable world. Through ethical design, inclusive policy, and human-centred thinking, we can ensure that AI serves humanity, not the other way around. AI should be used as a collaborative tool rather than a replacement: a “co-pilot” that assists with specific tasks and enhances human decision-making.

As the saying goes, “trust but verify”.

Regulation of Artificial Intelligence

Artificial Intelligence (AI) has enormous power to affect people’s lives, for better and for worse. The European Union (EU) decided to regulate AI early and comprehensively because it wanted to protect people’s rights, ensure safety, and build trust in AI, while also setting a global standard for responsible innovation. Many jurisdictions still rely on existing laws such as data protection, privacy, consumer protection, and product safety. Unlike the EU, the United States does not yet have a single, comprehensive federal law regulating AI; its approach is more decentralized, sector-based, and innovation-driven.

1. The EU AI Act

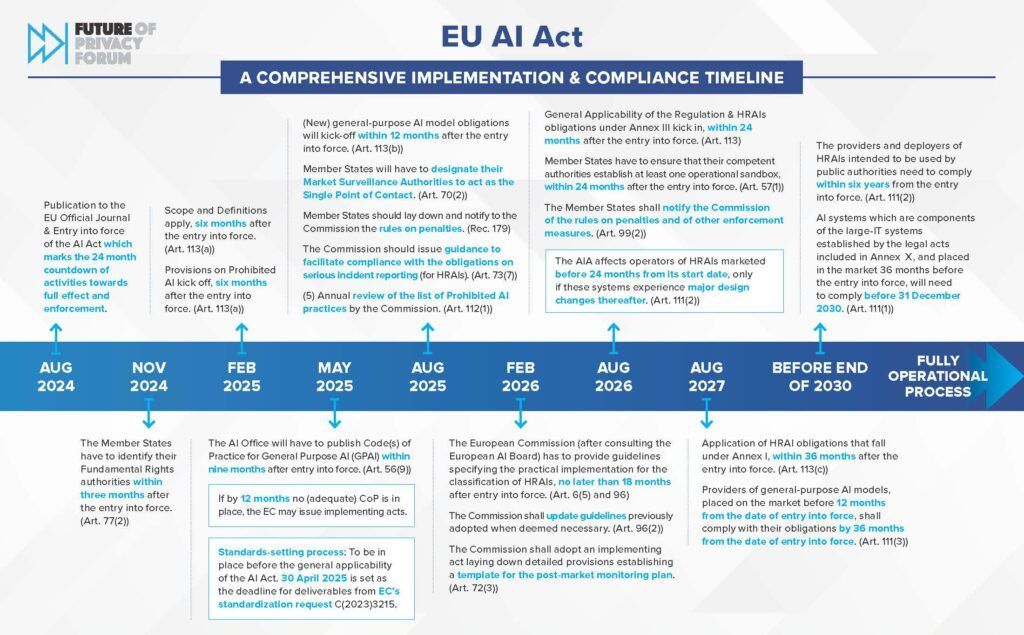

As an EU regulation, the Act applies directly in each member state. It entered into force in August 2024 and will be phased in over the coming years, with the high-risk rules becoming fully applicable around 2026-2027. Non-compliance can lead to substantial fines: up to €35 million or 7% of worldwide annual turnover, whichever is higher, for the most serious infringements.
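The fine ceiling for the most serious infringements can be illustrated with a little arithmetic. The sketch below is only a rough illustration, assuming the ceiling is the higher of the fixed amount and the turnover share; it ignores the lower fine tiers and any special treatment of smaller companies, and the function name `max_fine_eur` is hypothetical.

```python
# Illustrative sketch only: upper bound of the fine for the most serious
# infringements, assuming "EUR 35 million or 7% of worldwide annual
# turnover, whichever is higher". Not legal advice.

FIXED_CAP_EUR = 35_000_000   # fixed ceiling in euros
TURNOVER_PERCENT = 7         # share of worldwide annual turnover, in percent


def max_fine_eur(worldwide_annual_turnover_eur: int) -> int:
    """Return the assumed maximum fine in whole euros (hypothetical helper)."""
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_based = worldwide_annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    # A company with EUR 1 billion turnover: 7% is EUR 70 million.
    print(max_fine_eur(1_000_000_000))  # 70000000
    # A company with EUR 100 million turnover: the fixed cap applies.
    print(max_fine_eur(100_000_000))    # 35000000
```

Under this reading, the turnover-based ceiling only exceeds the fixed amount once worldwide annual turnover passes €500 million.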

The Act applies to providers, deployers, importers and distributors of AI systems in the EU (and in some cases those outside the EU whose systems are used within the EU). It excludes certain uses (e.g., exclusively military or defence uses, pure research, or personal non-professional use). Each member state must designate competent authorities to monitor and enforce AI obligations.

2. How AI Is Regulated

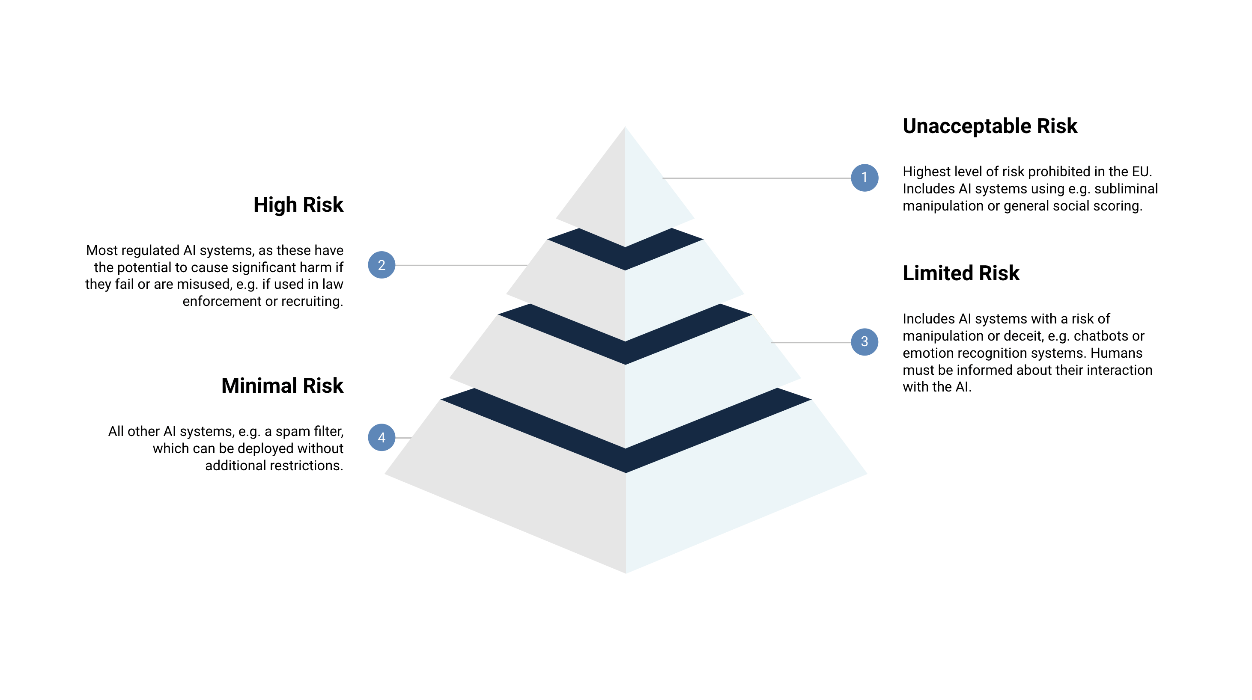

Whether in the EU or in other jurisdictions, the key regulatory frameworks seek to address:

- Transparency & disclosure: When AI systems are used (especially those interacting with humans), users may need to be told they are interacting with an AI, how decisions are made, etc.

- Data governance & quality: Requirements around the data used to train AI, to ensure fairness and accuracy.

- Human oversight and control: Especially for high-risk systems, ensuring humans remain in the loop and decisions are not fully automated without review.

- Safety, robustness & cybersecurity: Ensuring AI systems are safe and resilient.

- Accountability and auditability: Providers/deployers may be required to maintain records, perform risk assessments, and allow audits.

- Prohibitions: Some uses of AI may be outright banned (e.g., manipulative AI systems, social scoring, certain biometric uses) depending on jurisdiction.

- Enforcement & sanctions: Regulatory authorities can impose fines and other sanctions, and require compliance measures.

- Innovation support / sandboxes: Recognising that over-regulation could stifle innovation, some frameworks provide “regulatory sandboxes” or phased implementations. (The EU Act contains such provisions.)

3. Conclusion

AI has the potential for significant societal impact, both positive and negative, on employment, rights, safety, democracy, and security. Without regulation, risks include bias, discrimination, lack of transparency, misuse of personal data, and autonomous decision-making without accountability.

Regulation aims to strike a balance: enabling innovation while protecting fundamental rights and ensuring trust.